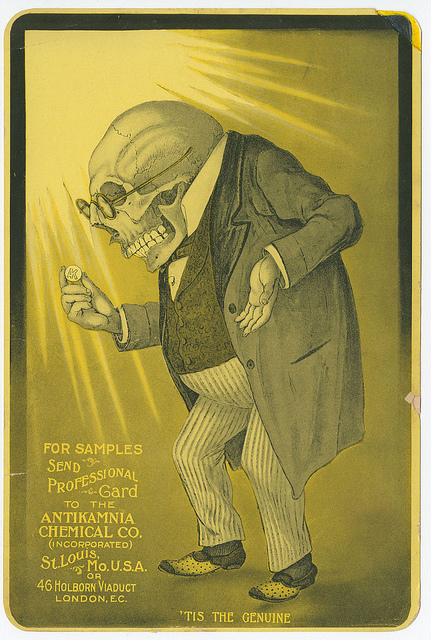

flickr photo shared by Library Company of Philadelphia with no copyright restriction (Flickr Commons)

What I wanted to do was grab data from the WordPress API and use that to provision chunks of my new portfolio site. The portfolio is hosted on GitHub, and GitHub serves it over HTTPS. At the moment my bionicteaching site is not HTTPS.[1] That causes problems, because browsers won’t let a secure page load data from an insecure one. Secure and insecure are not friends. I wanted a quick and easy solution so I could keep going until I make the HTTPS switch.
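To make the secure/insecure clash concrete, here is a minimal sketch of the check a browser effectively applies before a page requests data. The function name `isMixedContent` is made up for illustration, and using `woodwardtw.github.io` as the portfolio's address is an assumption based on the directory name used later in this post. Real browsers have more nuanced rules (local addresses, images versus scripts), so treat this as a simplification.

```javascript
// Hypothetical helper: true when an HTTPS page asks for a plain-HTTP resource,
// which is the situation browsers treat as mixed content.
function isMixedContent(pageUrl, resourceUrl) {
  const page = new URL(pageUrl);
  // Resolve relative resource URLs against the page, as a browser would.
  const resource = new URL(resourceUrl, page);
  return page.protocol === 'https:' && resource.protocol === 'http:';
}

// Assumed portfolio address asking for the live (insecure) WordPress API.
console.log(isMixedContent(
  'https://woodwardtw.github.io/',
  'http://bionicteaching.com/wp-json/wp/v2/posts/'
)); // true

// The same page asking for a JSON file stored alongside it.
console.log(isMixedContent(
  'https://woodwardtw.github.io/',
  '/json/weeklyPhotos.json'
)); // false
```

The second call hints at the way out: if the JSON lives on the same secure host as the page, the problem goes away.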

The following is how I wandered towards a solution. A number of these things worked, just not quite for what I wanted, so they’re worth documenting for later. It’s also kind of fun to see a mix of JavaScript, PHP, URL manipulation, the Google API, and the WordPress V2 API all in one little bit of wandering.

My first thought was to grab the JSON via a Google Apps Script and store it in Google Drive. I can do that, but I can’t seem to make the file available for use the way I want. I tried messing with various URL parameters but it wasn’t flowing, and I only started there because I thought it would be easy.

    var urlOfTheJson = 'http://bionicteaching.com/wp-json/wp/v2/posts/?filter[category_name]=photography&filter[posts_per_page]=30'; // URL for the JSON
    var folderId = 'gobbledygookthatyouwantoreplace'; // folder ID

    function saveInDriveFolder() {
      var folder = DriveApp.getFolderById(folderId); // get the folder
      Logger.log(folder.getName()); // confirm it is the folder we expect
      var response = UrlFetchApp.fetch(urlOfTheJson); // fetch the JSON (returns an HTTPResponse)
      var json = response.getContentText(); // response body as a string
      Logger.log(json);
      folder.createFile('weeklypics.json', json); // create the file directly in the folder
    }

I did eventually get the file accessible in Dropbox (the only other place I could think of immediately for HTTPS file storage), but setting up OAuth etc. to automate putting it there seemed like more hassle than it was worth for a short-term solution. For the record, you can make a JSON file accessible for this kind of use. To do so, get your Dropbox share URL (the one from the link icon) and change the first portion as shown below.

    https://www.dropbox.com/s/0snpxj1b6wpe267/weeklypics.json?dl=0 //doesn't work
    https://dl.dropboxusercontent.com/s/0snpxj1b6wpe267/weeklypics.json?dl=0 //works
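If you wanted to do that swap in code rather than by hand, something like the following would work. It is only a sketch: `toDirectDropboxUrl` is a made-up name, and it assumes the share link has the `www.dropbox.com` form shown above (Dropbox link formats may differ from what they were when this was written).

```javascript
// Hypothetical helper: swap the host of a Dropbox share link for the
// direct-download host, leaving the path and query string untouched.
function toDirectDropboxUrl(shareUrl) {
  const url = new URL(shareUrl);
  if (url.hostname !== 'www.dropbox.com') {
    throw new Error('Not a www.dropbox.com share link: ' + shareUrl);
  }
  url.hostname = 'dl.dropboxusercontent.com';
  return url.toString();
}

console.log(toDirectDropboxUrl(
  'https://www.dropbox.com/s/0snpxj1b6wpe267/weeklypics.json?dl=0'
));
// https://dl.dropboxusercontent.com/s/0snpxj1b6wpe267/weeklypics.json?dl=0
```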

With a tiny bit more thought, I opted to use my local development environment (Vagrant) to run a simple PHP file (below) which’ll save the JSON files to the directory that’s already synched to GitHub.

    <?php
    // WordPress API URLs to fetch
    $urls = [
        "http://bionicteaching.com/wp-json/wp/v2/posts/?filter[category_name]=photography&filter[posts_per_page]=30",
        "http://bionicteaching.com/wp-json/wp/v2/posts/?filter[tag]=javascript&filter[posts_per_page]=10",
        "http://bionicteaching.com/wp-json/wp/v2/posts/?filter[tag]=angular&filter[posts_per_page]=10",
        "http://bionicteaching.com/wp-json/wp/v2/posts/?filter[tag]=plugin&filter[posts_per_page]=10",
        "http://bionicteaching.com/wp-json/wp/v2/posts/?filter[category_name]=wordpress&filter[posts_per_page]=10"
    ];

    // Output file names, in the same order as $urls
    $fileNames = [
        "weeklyPhotos",
        "javascriptPosts",
        "angularPosts",
        "wpPluginsPosts",
        "wordpressPosts"
    ];

    for ($x = 0; $x < count($urls); $x++) {
        $url = $urls[$x];
        $file = file_get_contents($url); // returns false if the request fails
        if ($file === false) {
            continue; // skip this one rather than overwrite the old file with nothing
        }
        file_put_contents('woodwardtw.github.io/json/' . $fileNames[$x] . '.json', $file);
    }
    ?>
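On the portfolio side, the saved files can then be loaded from the same HTTPS origin as the page itself. The sketch below is an assumption about how that might look, not the code actually running on the site: the function names `summarizePosts` and `loadPosts` are made up, and the `/json/` path simply mirrors the directory the PHP script writes to. The `title.rendered` and `link` fields are part of the WordPress V2 API post format.

```javascript
// Hypothetical helper: keep just the fields a portfolio page would need
// from an array of WordPress V2 API posts.
function summarizePosts(posts) {
  return posts.map(function (post) {
    return { title: post.title.rendered, link: post.link };
  });
}

// Hypothetical helper: fetch one of the saved JSON files from the page's own origin.
// The '/json/' path is an assumption based on where the PHP script saves its files.
function loadPosts(name) {
  return fetch('/json/' + name + '.json')
    .then(function (response) {
      if (!response.ok) {
        throw new Error('Could not load ' + name + ': HTTP ' + response.status);
      }
      return response.json();
    })
    .then(summarizePosts);
}
```

In the browser, usage would be along the lines of `loadPosts('weeklyPhotos').then(function (items) { /* build the page */ });`. Because the request is relative, it stays on HTTPS and never touches the insecure site directly.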

[1] It is not even HTTPS.