I ran a AI prompting basics workshop this past Friday.1 The slides are here and there are way too many examples.

It led me to try lots of things. This is roughly what we did in the workshop.2 The images were generated via Copilot with a 1950s comic book prompt I’ve used pretty consistently. Amy helped me out by putting the prompts in the Zoom chat so people could easily duplicate the exact prompt we were using.

What I ended up doing with the participants was to start with prompt requesting a three line poem about a fish.3 Poems are short enough and interesting enough to keep people’s attention. They also have a mix of creative elements and, depending on your request, mechanical/facutal components (rhyme scheme, poem structure, subject, etc.).

Then we request the poem again, and again. The point here just being that the poem would be different each time (unless you’re using Bing, which seems irritated you ask the same question twice in a row). The three line poems also tended not to rhyme, although that’s inconsistent. If they do rhyme, it’s almost universally AAA.

Next we’ll ask for a four line poem. These tend to be either AABB or AAAA rhyme schemes. The point being that small prompt changes can cause interesting changes in responses- in this case rhyme schemes. This isn’t an A+B=C scenario. It’s a “season to taste” scenario. That’s weird for computers.

Anyway, I got this four line poem.

In the river’s flow, a fish’s tale untold,

Glistening scales, a sight to behold.

Through sunlit ripples, it gracefully sways,

In tranquil depths, where secrets lay.

AABB rhyme scheme. When I asked for a syllable count, I got the following . . . which is wrong and it’s not like computers can’t count syllables pretty well. Most of the participants in the workshop got incorrect counts as well.

1st line: 10 syllables

2nd line: 9 syllables

3rd line: 9 syllables

4th line: 8 syllables

You can see another failed attempt of syllable counting here in Gemini rather than ChatGPT. This example comes complete with the Python code used which makes it seem extra official. What’s odd is that you can get it to count correctly if you tell it that it’s wrong (towards the bottom). That puts the LLM in a weird place where it might be able to do something, but will still do it incorrectly . . . or do it right sometimes and wrong sometimes. Sometimes it says it can’t do something while doing it. You can see me try really, really hard to get an ABAB rhyme scheme using all sorts of AI prompt tricks here. I have yet to succeed there. One of the things I tried to stress is that if you can’t evaluate the output of the LLM, then you really have no idea what it’s giving you and it’s going to give it to you with full confidence.4 In a lot of ways it’s my dream of an unreliable narrator. I don’t think that’s what most people are hoping for though.

Personally, I’m also struggling to find the various examples I’ve created. Some of that is because I start with one chat topic and drift to another. That’s a bad practice given how chats end up being titled. Both Gemini (free version), and ChatGPT (upgraded) seem to see these chats as pretty ephemeral. That makes sense in certain ways and may change, but in the meantime, I need to add a layer of structure so I can find these things more easily. It matters as I start to collect examples for workshops and, if I was using this more methodically in a job, I’d want to be able to easily access my go to patterns. ChatGPT does better than Gemini as I’ve got a pretty consistent history even if it’s poorly labeled and not searchable across chats. My old Gemini stuff seems to be gone now. I can access the public chats I shared, but it looks like the history duration is pretty limited. Live and learn. There do seem to be some third-party solutions for this in ChatGPT.

So I end up comparing LLMs to young golden retrievers- eager to please but not entirely reliable. They’ll fetch something. It may or may not be the stick you threw. They will be happy as they do it and be pretty confident it’s the right stick . . . even if it ends up being a slobber-covered ball rather than a stick.

The other thing I tried to stress was the LLM and AI are vague terms and the experiences within various products will vary dramatically. Paid versions vs free versions will definitely differ. Microsoft’s Copilot will integrate with MS products in very different ways that Google’s Gemini. Colin Fraser has a good post getting into the AI naming issue (in addition to a number of other important points). I didn’t go into the fact that MiddJourney has an entirely different set of commands that work in conjunction with the LLM-side of things. That interface being in Discord and being closer to terminal command prompts with flags etc. and now having different interface-like buttons built in . . . is odd. Yet it’s all put in the same bucket in most conversations.

Just to set the stage, I listed a few of the more popular LLMs and their companies. I stressed the change in names with Google’s Bard/Gemini and the fact that Copilot is built on ChatGPT 4 and DALL-E with a layer of Microsoft on top. It’s a bit messy and in flux.

It’s further complicated in good and bad ways by a whole lot more AI models. I talked a bit about Hugging Face and how there are so many different models out there built do really specific and odd things.

That culminated with the example of MonadGPT (which I found via Res Obscura). This LLM is a finetune of Mistral-Hermes 2 on 11,000 early modern (17th century) texts in English, French and Latin, mostly coming from EEBO and Gallica. I compared its response to my question about healing my witch-cursed cow with ChatGPT’s version (which was actually better than I expected).

I just really wanted to show that an LLM might be really different from the mass marketed versions that most people think of. The LLM might be explicitly anti-productivity and more about odd experiential explorations of data sets and experiences that don’t lead to some clearly identified learning objective. Maybe it just amuses you or helps generate interest to explore the source data. LLM as gateway drug for the raw source.

I then walk them through a prompt across Copilot, ChatGPT 4, and ChatGPT 3.5. The point being that Copilot (running on GPT4) provides one summary with multiple sources, GPT4 provides one source, and GPT3.5 says I don’t know current news. It should bring up questions about what financial relationships lead to being a “Microsoft Start Partner” and why GPT only cites UPI. It also points out how experiences between paid and free models can be very different in terms of capability.

My general guidelines for prompts are to do all the stuff your 9th grade English teacher told you to do when writing a paper. I compared it to the old assignment where you had to write a step-by-step of some simple task like making a peanut butter sandwich. Then you had to perform the task in front of the class. Inevitably, you’d forget a step like “put the jar down” and the whole class would call you on it amongst much mayhem.5

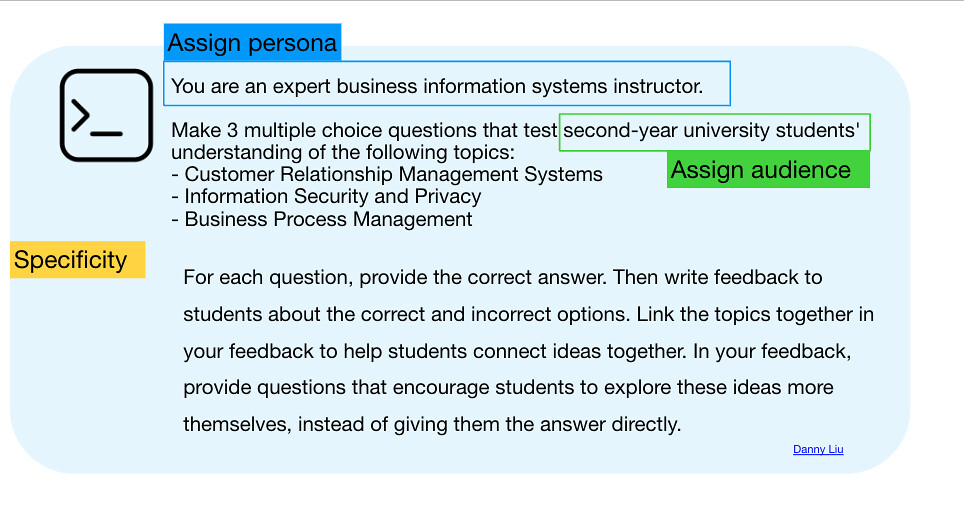

Then I go through a bunch of different prompting patterns. They’re in the slides with links to examples in various LLMs. It seems boring to write them here.

I did try to diagram out how you can combine prompting techniques to get better results.

That led me to thinking a bit more about how a prompt library that offered both the economy of having prompts to copy or join in on could be mixed with annotation that shows the concepts implemented in the prompt itself. I haven’t really worked out the structure/terms completely but something like this. You’d then be able to collect examples under particular concepts and do whatever other taxonomic/categorical stuff you want.

See the Pen

prompt breakdown example by Tom (@twwoodward)

on CodePen.

Amy finished things off by talking about LLMs in your class and with students.

I hope some line was walked between explaining what LLMs are and how they work with enough critical analysis to provide some caution without coming on so strong I get ignored as too negative. LLMs and AI are big enough that I can’t be pro or con. I like certain potentials. I could have some real fun with aspects of these massively different technologies. I could also worry about so many things. I think Gardner’s old bag of gold analogy applies in lots of ways. Unfortunately, what we have learned is that if there is a bag of gold, capitalism will sell you plenty of high-priced, addictive, radioactive-lead-asbestos, gold-like™ items created by destroying the most beautiful areas in the world using slave labor. Soon no one will remember what real gold even looks like or that we didn’t have to do it this way or that most of the problems were created by pure greed. The companies will then charge us for rehab.

1 Just like everyone else.

2 Eh, presentation? I didn’t really give enough time for individual exploration. I’ll do better next time.

3 I always choose fish as my subject after an unfornate Brady Bunch (it was the most wholesome thing I could think of in the moment) image result many years ago.

4 Except when it throws in a footnote that the data is for display purposes only.

5 Or maybe that was just my experience.

I am re-using a lot of your exercises as part of a wider workshop we are doing with our Faculty of Science tomorrow. (With very explicit attribution.) It will be my first time doing something like this and I am really grateful you shared. I am also quoting your lines about the golden retriever, and the bag of gold. I hope this lavish borrowing is cool with you.

Ha ha . . . man, I’m thrilled to have you use any part or all of it. Do whatever you’d like regarding attribution. I’m not worried about it. Always makes me happy that the stuff is worth something to someone.

You’ve always been so generous, I was kind of counting on that. I literally used copies of some slides — like the “robot holding peanut butter jar” one. That example, and pretty much all of what I used seemed to go over pretty well. Thanks again for sharing this, and so much else of your recent practice. I’ve been feeling a bit overwhelmed at times lately and it is so helpful to be able to draw on the thoughtful work that you are doing.