creative commons licensed ( BY-SA ) flickr photo shared by nojhan

Alice Campbell in the VCU library hosted a Wikipedia edit-a-thon today. It was interesting and we had a variety of faculty and even some students show up.

Gardner joked at one point whether we had a leader board for edits. It got me thinking. I remembered that Wikipedia keeps track of the edits of logged in users and I figured I’d take a shot at scraping some of that data so we’d have a rough idea of how many edits were made by our group.

I started off by looking at the contributions page. This URL will get you the page for my user name.

https://en.wikipedia.org/wiki/Special:Contributions/Woodwardtw

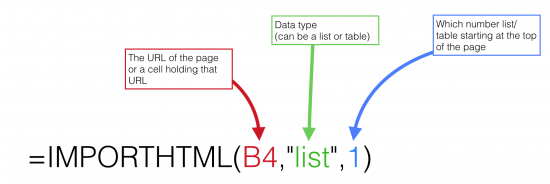

I used the IMPORTHTML formula in Google Spreadsheets.1

It was easy because this was the first list on the page. You can see in the image above that you have the choice between trying to grab a list or a table. The other variable is what number that element is from the top of the page.

You can see the working document embedded below.

I considered parsing out2 the ..(+30)..3 but after talking to Alice that wasn’t the kind of data that would travel well. She was more interested in number of edits which, as it turns out, is available on the Edit Count page that’s linked at the bottom of the Contribution page.

https://tools.wmflabs.org/xtools-ec/index.php?user=Woodwardtw&project=en.wikipedia.org

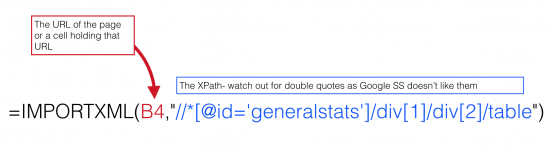

This page is a little more complicated but easy once you know a few things. The IMPORTHTML option gets confused on a page as dense as this one. Your new friend is IMPORTXML because it lets you define an XPath to your data. While XPath syntax feels easier to me than some of the regex stuff it can still be a pain.

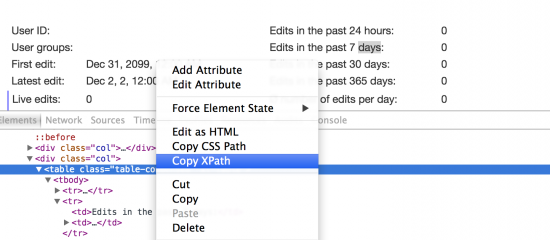

Luckily, Chrome has a handy shortcut. Choose the area with data you want to import then right click and choose Inspect Element. When the window showing the HTML opens you can right on the HTML containing your data and choose Copy XPath

In my case I get this //*[@id=”generalstats”]/div[1]/div[2]/table which becomes the second variable in my IMPORTXML formula once I replace the double quotes surrounding generalstats with single quotes.

That results in the spreadsheet embedded below.

That results in the spreadsheet embedded below.

You want to look out for capitalization issues with Wikipedia usernames. They seemed to cause some problems on both pages but were worse on the WMFLabs pages. Those pages also seem to be finicky at times. I’m not sure why so this particular use isn’t something I’d bet my life on but it does get you some information pretty quickly. I doubt many people will be looking for Wikipedia data of this sort anyway but the basic premise has some promise and ought to be pretty widely applicable to other things.

One thought on “Scraping Wikipedia User Data w Google Spreadsheets”

Comments are closed.